Data management tool for ML Engineers E2E

DagsHub | Lead Product Designer | 2023–2024

Learning a new role and domain

When I stepped into my role in 2022 — just before the initial AI boom of early 2023 — I entered an evolving domain with no established industry standards. AI development tools were rapidly advancing, and with that came inevitable growing pains. In many ways, I was growing alongside them.

Transitioning from a structured, six-person product team at a large company to being the solo designer at a startup was both a challenge and an opportunity.

Through this project, I faced two major challenges:

- Navigating a new domain with no established UX patterns

- Designing a foundational product experience without a clear set of requirements

How do ML Engineers work with data?

The Data Engine feature was initiated as a product decision to pivot the company’s efforts towards data management.

In conversations with potential customers, we consistently heard that managing data was one of the most challenging parts of a data scientist's or ML engineer's work. Their existing tools were difficult to use, and were often adapted from general-purpose cloud storage or internal scripts.

This was especially true for teams with models in production, which constantly need to gather, curate, and maintain data pipelines for their machine learning projects.

Key challenges surfaced repeatedly:

- Difficulty exploring large volumes of multimodal data (images, audio, tabular)

- No easy way to track which dataset was used in which model version

- Lack of visual tools to browse, curate, or query datasets

At the same time, the feature was new and needed to integrate seamlessly with other platform components such as code, experiments, and annotation workflows.

Using design prototypes to navigate the ambiguity

At the outset, the feature's definition was broad and vague. Given the scale and the extensive technical effort involved, neither the dev leads nor the product stakeholders could answer my early questions, and I could gather little information about implementation details.

Some of the questions I had in mind:

What kind of filters would we like to develop?

What are their requirements (e.g., input-based, range-based, or date/time-based)?

What does the metadata look like? Are there specific categories? Is there a one-to-one relationship between a metadata field and its value?

We had to move fast: even with my questions unanswered, we needed to test our product hypothesis with potential customers.

My approach was to draw on my previous experience with library catalog databases and search experiences (see NLI search redesign), despite the yet-to-be-defined requirements and specs, and to refine and iterate along the way.

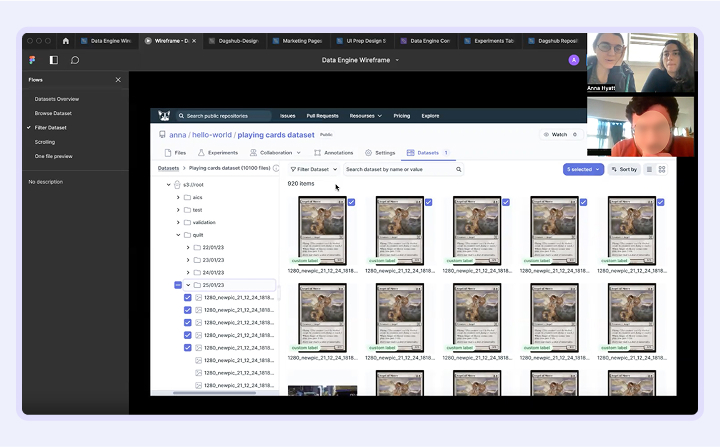

The first design versions were mock prototypes. Thanks to the Design System I had established by then, I could create them at lightning speed.

The prototypes served both as a demo for customer calls and as an anchor for internal discussions. They helped reveal alignment gaps, technical limitations, and user expectations far earlier than a traditional spec would have.

One of the early design prototypes

When filters fail: an insight from domain users

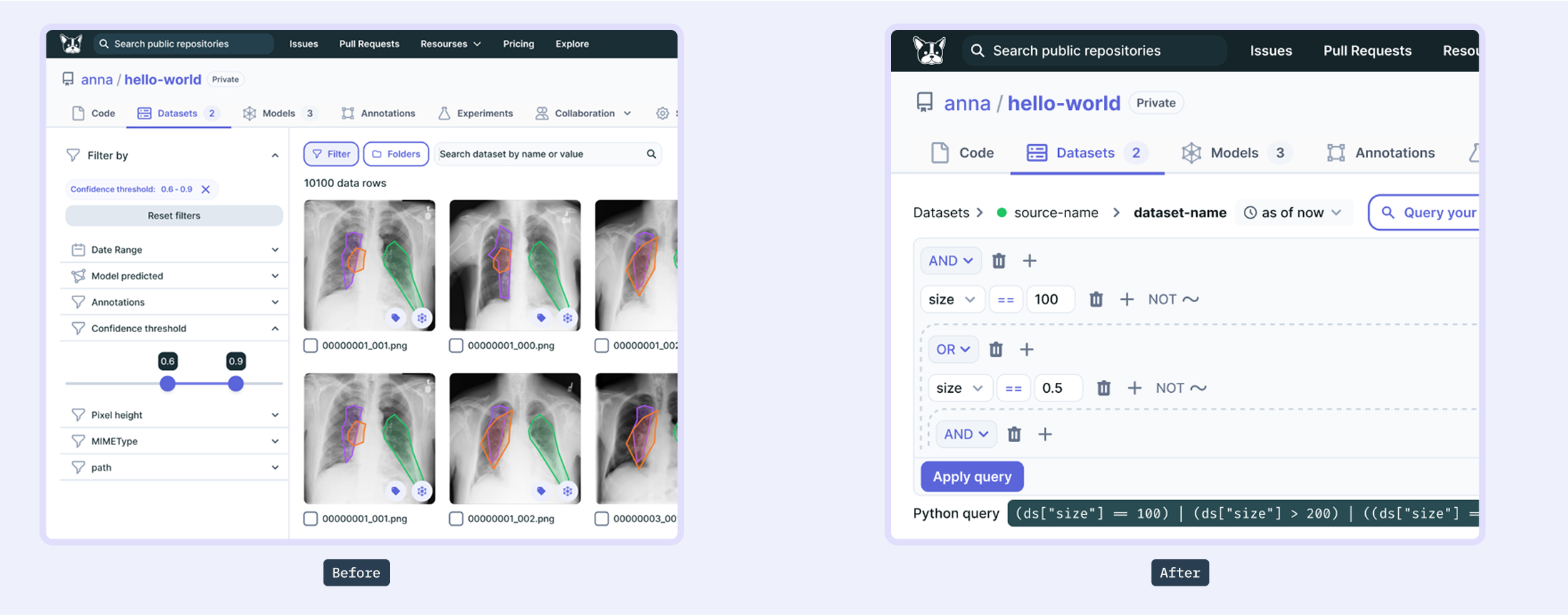

My prototype originally featured sidebar filters — a pattern I had used effectively in the past. But during interviews with ML engineers, I noticed a misalignment.

“I would like to be able to write my query right here, in the search field: most of researchers' work is done via programming, so it will be more natural for them to use”

I realized the filter UI model was misaligned with how our users conceptualize data: as code, not categories. Instead of conventional sidebar filters, users wanted an input field where they could simply type their query. It saves a lot of screen real estate and is very clear.

Feedback session with a potential customer using my prototype

Shifting to a query builder model

I brought this insight back to the team, but their response was:

Implementing something like this is unrealistic in the foreseeable future: it requires complex validation scenarios, such as syntax and formatting.

So I went back to the drawing board and reworked the UI to reflect both our users' mental model AND the technical constraints.

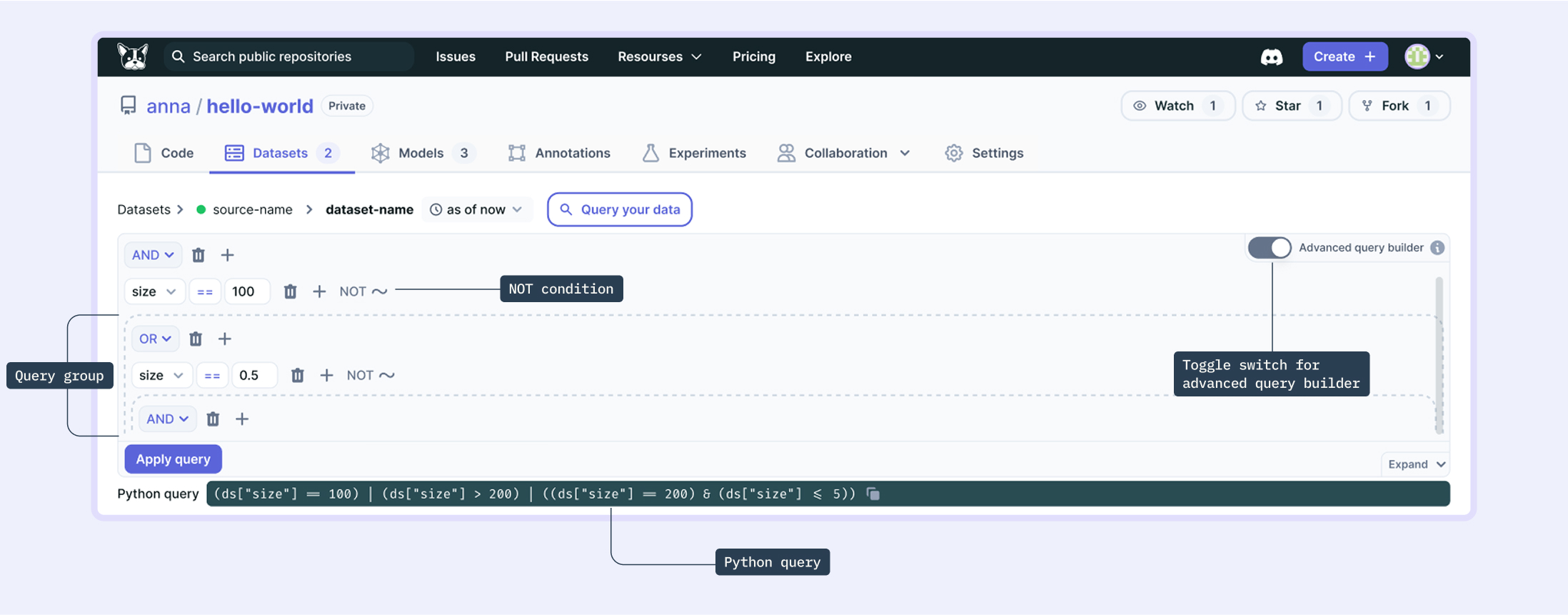

The revised interface introduced:

- A visual query builder that lets users define SQL conditions through the UI, which translate easily into back-end queries

- AND, OR, and NOT conditions, with grouping

- In addition, I suggested showing an equivalent Python query snippet (its syntax aligns with the tools ML engineers already use, enabling smooth integration with their workflows and minimizing context switching)

This approach preserved usability for less technical users (such as annotators) while offering precision and familiarity for experienced ML engineers.

Post-launch feedback confirmed we were on the right track:

“It’s great that the query is fully translatable between UI and code.”

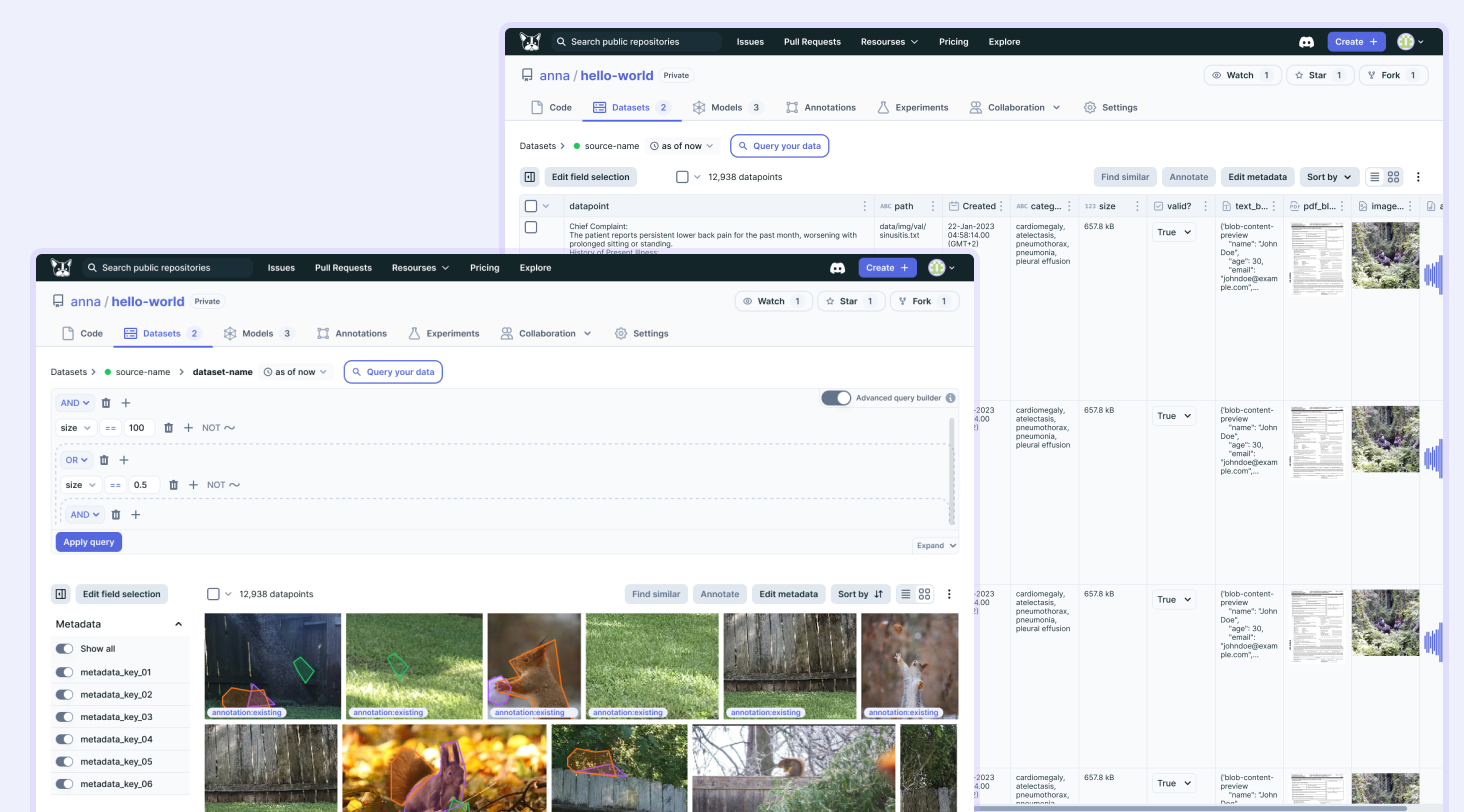

Working within constraints: designing an API-first MVP

Our engineering capacity was prioritized for backend and API development, so the UI had to be lightweight in the first release. Much of the actual logic lived in API calls, and the frontend initially served as a visualization and query-composition layer.

I adjusted the design scope accordingly — defining a reduced MVP version that still delivered user value and provided the foundation for later expansion.

API-first MVP mock

Scaling with feedback: design partners and behavior tracking

We onboarded several design partners who received early access in exchange for feedback. All of them converted into paying customers. I tracked user sessions in Amplitude and LogRocket, which helped us identify quick wins:

- Removed unnecessary links in dataset lists

- Improved loading and empty states

- Provided responsive fixes for small screens

Outcomes and impact

Since the release, the feature has become a major market differentiator for DagsHub and consistently attracts new customers to the platform. Check it out in production.

- Doubled the number of paying customers within six months

- Validated a UI model aligned with domain workflow

- Converted all early design partners into committed customers

- 2X customers within 6 months

- +7% increase in conversion rate

- +20% increase in engaged users